ML in solar physics

Generating magnetograms using supervised GANs

Over the last 100 years, solar physics has accumulated an unprecedented amount of data. Space- and ground-based telescopes provide long-term, continuous coverage of the Sun, and with this explosion of information comes a greater ability to extract meaningful science. In parallel, machine learning has burgeoned over the last few years, which makes the two fields a natural match.

The Kodaikanal Solar Observatory (KSO) has been taking daily images of the Sun since 1904. Long-term, continuous coverage is essential for understanding the solar dynamo and studying the 22-year solar cycle. Studying this cycle is crucial not only for understanding solar dynamics, but also for understanding its effects on space weather and, consequently, on Earth.

The project aimed to generate high-resolution magnetograms from Ca II K images in the Kodaikanal dataset.

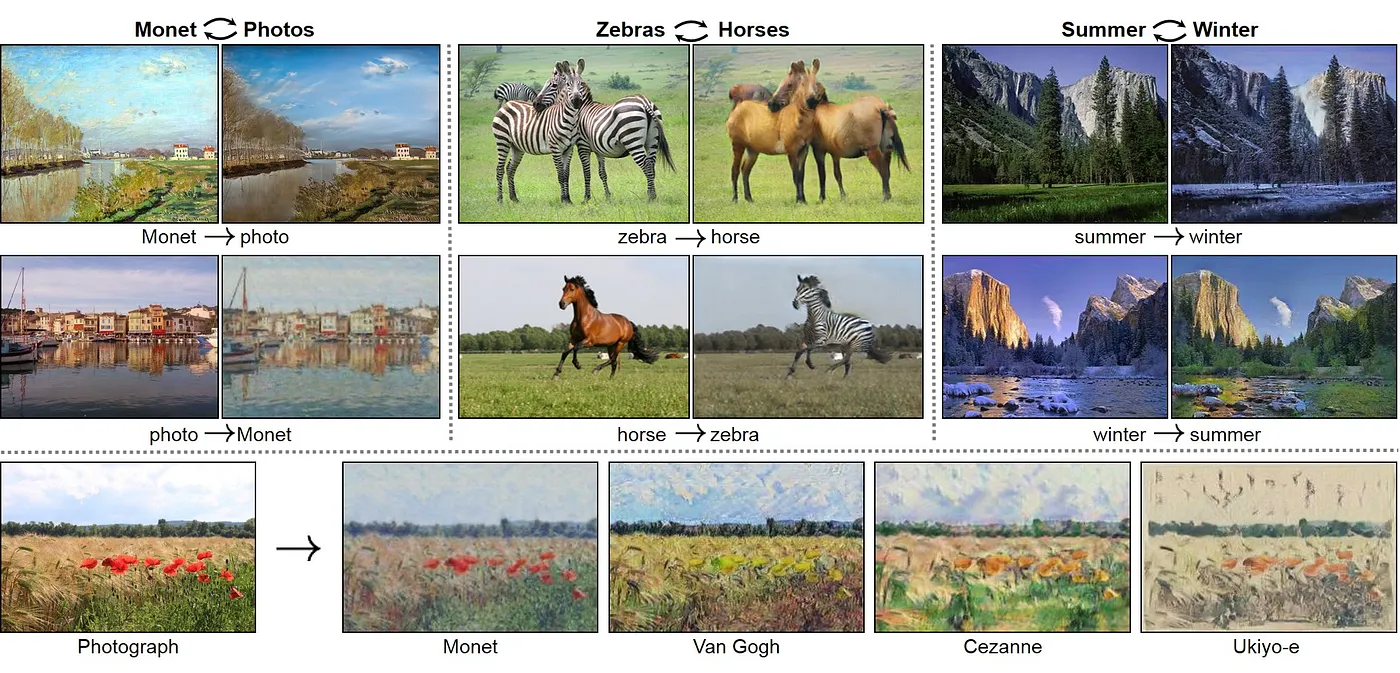

Image-to-Image translation

Image-to-image translation is a machine learning problem in which a model learns the mapping between a set of input images and their corresponding output images. The learned mapping can then be used for tasks such as style transfer.

For our purpose, we used pix2pixHD, a supervised conditional generative adversarial network developed by Nvidia in collaboration with UC Berkeley. In the following sections, I will explain all of this jargon. Don’t worry.

*** Side note: The 2024 Nobel Prize in Physics was awarded for foundational work on machine learning with artificial neural networks!!!

Conditional Generative Adversarial Networks

Now, the first very fundamental question that might arise is - Wha… Whattt?

Yep. Good Question.

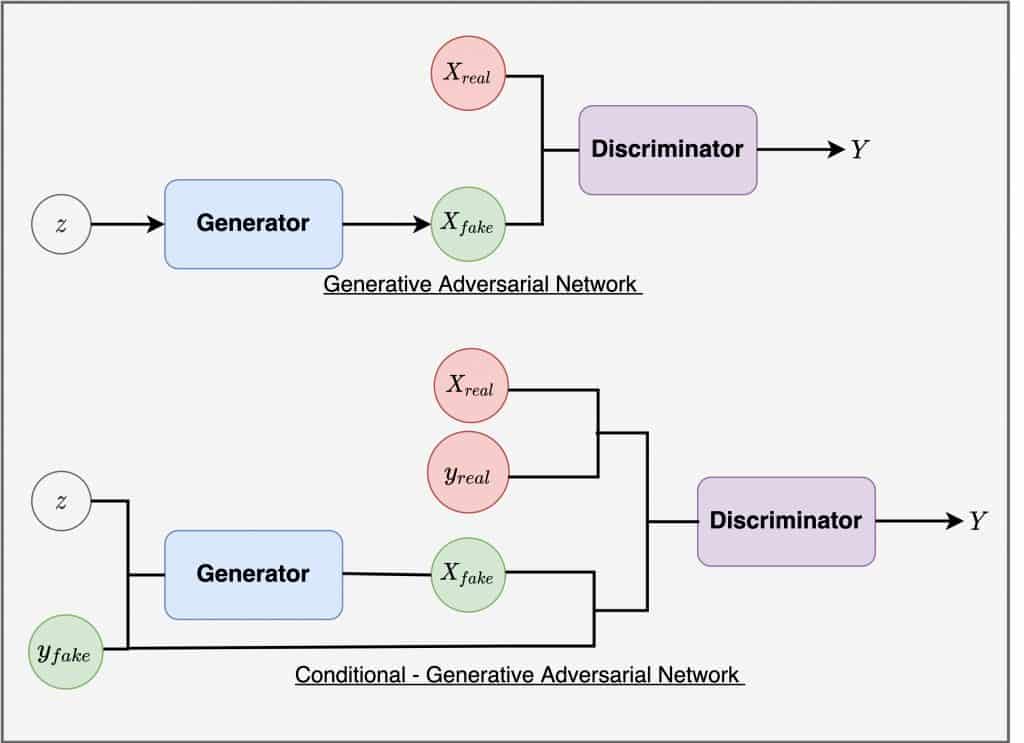

Well, cGANs are a type of generative adversarial network (GAN) that generates outputs based on a set of conditions. GANs themselves are a special kind of neural network (well, two networks) trained against each other. One network, called the Generator, generates images from its input (a latent vector in a plain GAN, plus the condition in a cGAN; in our case the condition is an input image). The second network, called the Discriminator, tries to distinguish the generated (fake) images from the real ones.
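To make the tug-of-war between the two networks a bit more concrete, here is a minimal sketch of the textbook cross-entropy GAN losses. It only uses the Discriminator's output probabilities, so no actual networks are needed. The function names are made up for illustration, and this is the generic formulation, not necessarily the exact loss that pix2pixHD trains with.

```python
import math

EPS = 1e-12  # avoids log(0); an illustrative choice, not a tuned value


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss that the Discriminator tries to minimize.

    d_real: probabilities D assigns to real (condition, target) pairs
    d_fake: probabilities D assigns to (condition, generated) pairs
    """
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating Generator loss: small when D is fooled by the fakes."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# A Discriminator that separates real from fake well has a low loss...
confident = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
# ...while one that outputs 0.5 everywhere is just guessing.
guessing = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(confident < guessing)
```

During training the two losses are minimized in alternation: the Discriminator gets better at spotting fakes, which in turn pushes the Generator to produce more convincing images.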

Now, the particular cGAN that we used for this project is pix2pixHD, developed by Nvidia and UC Berkeley. It is a high-resolution cGAN that can generate images from semantic maps. You can read the paper here.

How was this used for our benefit?

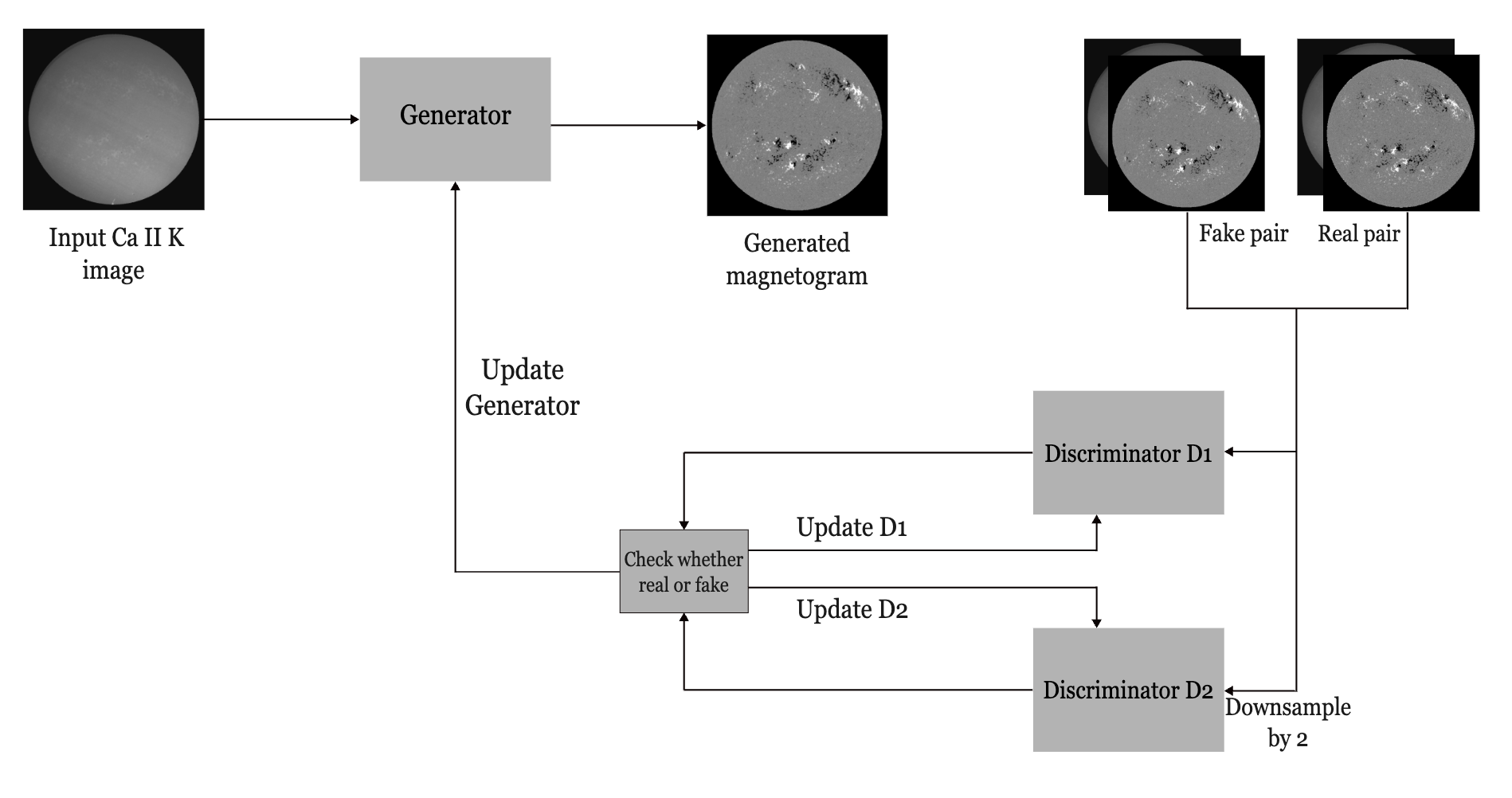

We use this high-resolution network to translate Ca II K solar images (basically images of the Sun seen through a filter at a specific wavelength) into magnetograms. The Ca II K line is found to be a close proxy for the unsigned magnetic field of the Sun. The figure above shows a schematic of how the network translates these images into magnetograms.

Using this technique, the network learned the relationship between Ca II K images and magnetograms and could generate realistic magnetograms from an arbitrary Ca II K image. But of course this is far from perfect, since Ca II K images carry no information about the polarity of magnetic features. This means the polarity needs to be assigned manually. For each observed magnetic feature, we assign the polarity based on the solar cycle, so alternating solar cycles have alternating leading polarities. (The leading polarity is the polarity of the part of an active region that leads in the direction of solar rotation. Its sign alternates every solar cycle, which is known as Hale’s Law.)
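As a rough illustration of the polarity assignment, here is a small sketch of a Hale's Law lookup. It is not the code used in the project. The function names are hypothetical, and the reference sign (which polarity leads in the northern hemisphere during odd-numbered cycles) is an assumption that should be checked against observed magnetograms. The hemisphere flip is part of the standard statement of Hale's Law rather than something described above.

```python
# ASSUMPTION: reference convention only; verify against real magnetograms.
NORTH_LEADING_SIGN_ODD_CYCLE = +1


def leading_polarity(cycle_number, hemisphere):
    """Return +1 or -1 for the leading polarity of an active region."""
    if hemisphere not in ("N", "S"):
        raise ValueError("hemisphere must be 'N' or 'S'")
    sign = NORTH_LEADING_SIGN_ODD_CYCLE
    if cycle_number % 2 == 0:
        sign = -sign  # the sign flips from one solar cycle to the next
    if hemisphere == "S":
        sign = -sign  # the two hemispheres have opposite leading polarity
    return sign


def sign_bipolar_region(unsigned_leading, unsigned_following,
                        cycle_number, hemisphere):
    """Turn unsigned field values of a bipolar region into signed ones."""
    lead = leading_polarity(cycle_number, hemisphere)
    return lead * abs(unsigned_leading), -lead * abs(unsigned_following)


# Consecutive cycles get opposite leading polarities in the same hemisphere.
print(leading_polarity(23, "N"), leading_polarity(24, "N"))
```

Keeping the convention in a single constant means that, if the reference sign turns out to be wrong, only one line needs to change.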